Refining the design, finalising prototyping and building the final robot

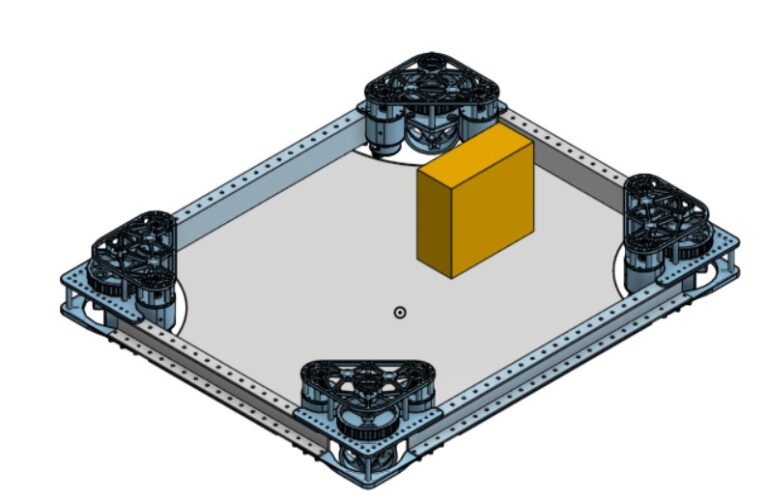

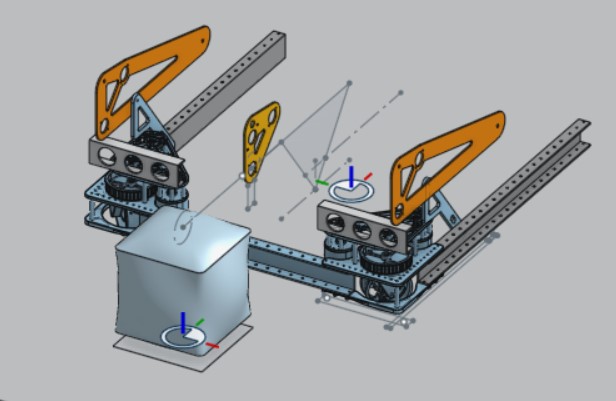

Drivebase & robot assembly

The team has made improvements to the drivebase so that it aligns with the rest of the robot's parts, such as the Emplacement subsystem. Some of the updates are shown below.

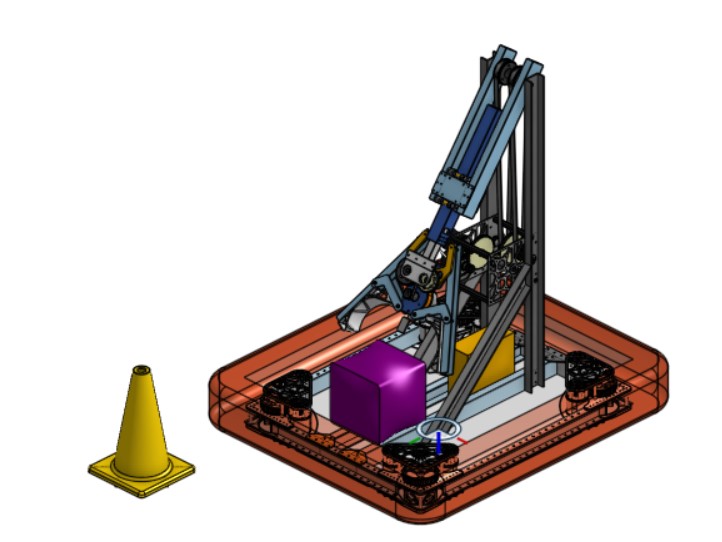

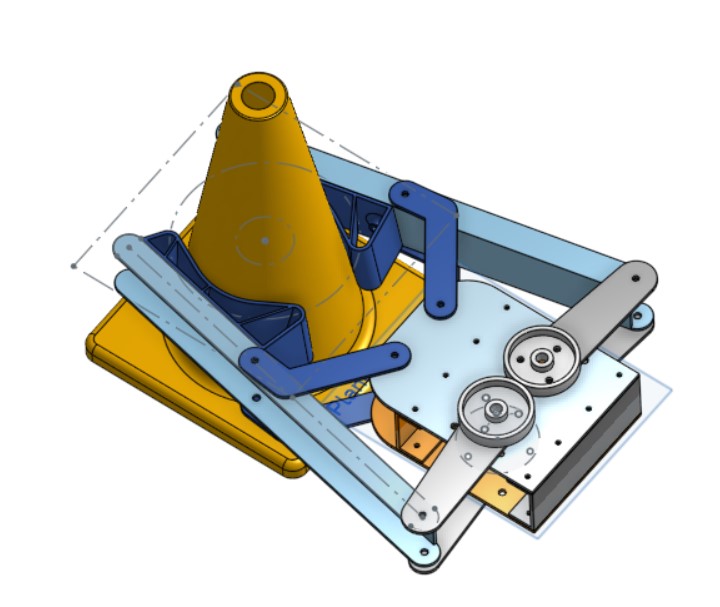

Emplacement & Intake

The Emplacement sub-team has finished designing a second version of the arm, based on what was learnt from the first. The new version has a single, larger gearbox for the arm's rotation, which has been fully fabricated and tested; a stronger two-stage telescoping extension; and a simpler rigging configuration. The rest of the arm is currently being fabricated.

The Intake sub-team built a prototype of the cube ground intake. It performed well, so they have now designed and fabricated the final version.

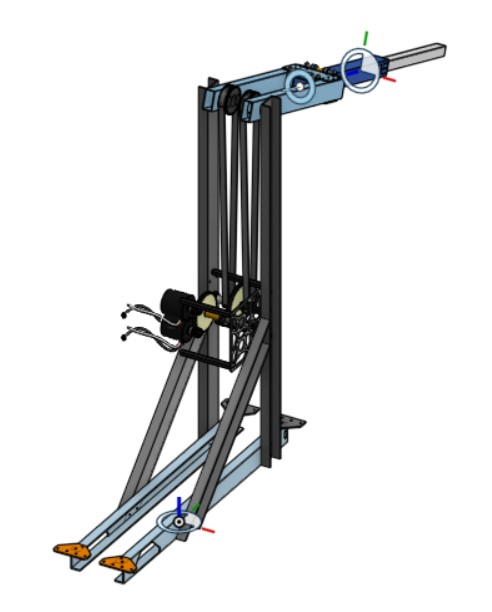

End effector

The End Effector sub-team has finished the second version of the claw, which uses a single, larger cylinder. They have added a limit switch that detects when a cone or cube is in the claw, so that the claw closes automatically. Several different claw materials and shapes were also tested.
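The automatic closing described above boils down to a small piece of control logic. Below is a minimal Python sketch, assuming the limit switch reads `True` while a game piece presses it and that the claw should close only after the switch has been held for a short debounce time. The `ClawController` class, its method names and the 50 ms debounce value are hypothetical illustrations, not the team's actual code. One design point worth noting: after the claw is opened to release a piece, the switch may still be pressed for a moment, so the sketch only re-arms the auto-close once the switch has been released.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ClawController:
    """Hypothetical auto-close logic for a claw with a game-piece limit switch."""

    debounce_s: float = 0.05  # assumed: switch must stay pressed this long before closing
    closed: bool = False
    _armed: bool = True  # auto-close is allowed only while armed
    _pressed_since: Optional[float] = None

    def update(self, switch_pressed: bool, now_s: float) -> bool:
        """Call periodically; returns True if the cylinder should be commanded closed."""
        if not switch_pressed:
            # No piece detected: reset the debounce timer and re-arm auto-close.
            self._pressed_since = None
            self._armed = True
        elif self._armed and not self.closed:
            if self._pressed_since is None:
                self._pressed_since = now_s  # start timing the press
            if now_s - self._pressed_since >= self.debounce_s:
                self.closed = True  # piece held long enough: close the claw
        return self.closed

    def release(self) -> None:
        """Open the claw (e.g. when scoring) and disarm until the switch is released."""
        self.closed = False
        self._armed = False
        self._pressed_since = None
```

In a robot loop, `update()` would be called every cycle with the current switch reading and timestamp, and `release()` when the driver or an autonomous routine scores the piece.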

Software

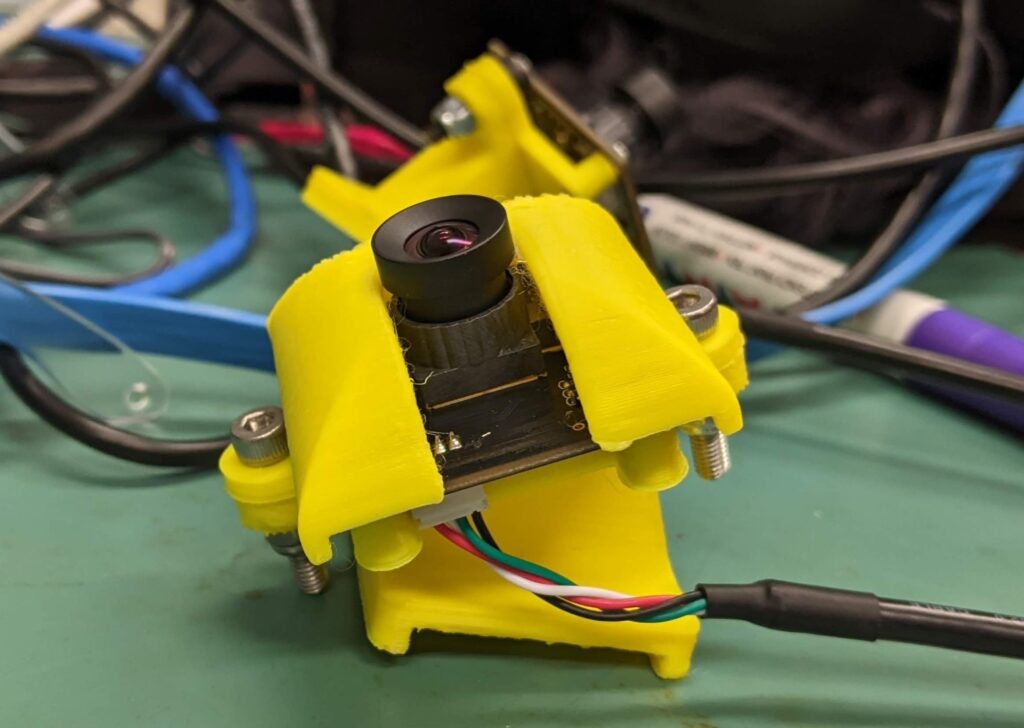

Much work has been done on the vision system and on getting the control system running on the robot.

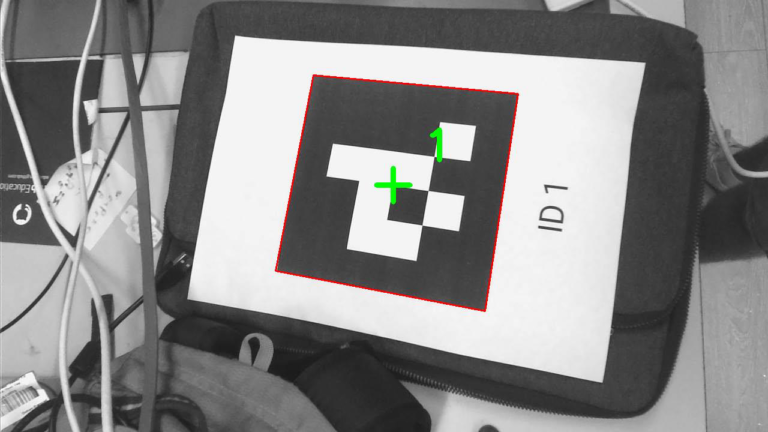

The AprilTags used on the 2023 FRC field are a type of fiducial marker: an easily recognisable pattern used for visual localisation. A single AprilTag doesn't carry much information; it only encodes a single number, and only numbers 1 to 8 appear on the field. AprilTags contain a lot of redundancy: out of all 2^(4×4) = 65,536 possible 4×4 binary images, only 30 are valid 4×4 AprilTags. This is because valid tags are chosen to avoid patterns that frequently appear in ordinary images, such as plain rectangles, and to be as different from each other as possible (a high Hamming distance). In turn, this makes automatic recognition of valid AprilTags relatively easy and very reliable.
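To make the redundancy argument concrete, here is a minimal Python sketch of how a decoder can use Hamming distance. It treats the 4×4 data grid as a 16-bit integer, compares an observed pattern against every 90-degree rotation of each valid code, and accepts the nearest code only if it is within a small number of flipped cells. The codebooks used with it are hypothetical placeholders, not the real tag16h5 codes (the family used on the 2023 field). Real detectors also do considerably more work before this step, such as finding the tag's square outline and sampling its cells.

```python
from typing import Dict, List, Optional


def rotate90(code: int) -> int:
    """Rotate a 4x4 bit grid (row-major, most significant bit = top-left cell) 90 degrees clockwise."""
    out = 0
    for r in range(4):
        for c in range(4):
            bit = (code >> (15 - (r * 4 + c))) & 1
            # Clockwise rotation moves cell (r, c) to (c, 3 - r).
            nr, nc = c, 3 - r
            out |= bit << (15 - (nr * 4 + nc))
    return out


def rotations(code: int) -> List[int]:
    """All four orientations of a code, since a tag can be seen at any 90-degree rotation."""
    rots = [code]
    for _ in range(3):
        rots.append(rotate90(rots[-1]))
    return rots


def hamming(a: int, b: int) -> int:
    """Number of cells that differ between two 16-bit codes."""
    return bin(a ^ b).count("1")


def min_distance(codebook: Dict[int, int]) -> int:
    """Smallest Hamming distance between any two codes, over all rotations.

    Each code is also compared with its own rotations; otherwise the
    tag's orientation would be ambiguous.
    """
    codes = list(codebook.values())
    best = 16  # two 16-bit codes can differ in at most 16 cells
    for i, a in enumerate(codes):
        for rot in rotations(a)[1:]:
            best = min(best, hamming(a, rot))
        for b in codes[i + 1:]:
            for rot in rotations(b):
                best = min(best, hamming(a, rot))
    return best


def decode(observed: int, codebook: Dict[int, int], max_errors: int) -> Optional[int]:
    """Return the ID of the nearest valid code, or None if none is within max_errors flipped cells."""
    best_id, best_dist = None, max_errors + 1
    for tag_id, code in codebook.items():
        for rot in rotations(code):
            d = hamming(observed, rot)
            if d < best_dist:
                best_id, best_dist = tag_id, d
    return best_id
```

If a codebook's minimum distance is d, then choosing `max_errors = (d - 1) // 2` guarantees that a pattern with up to that many misread cells is still matched to the correct tag and never to a different one.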

After the vision pipeline (PhotonVision, running on a coprocessor) detects an AprilTag, it reports the number the tag encodes and the tag's 3D position relative to the camera. This position can be calculated from the in-frame positions of the tag's corners and the camera's intrinsic parameters. The roboRIO then estimates the robot's position on the field with some linear algebra, combining three things: the field-relative positions of the tags, which are known in advance (the appropriate tag is selected by its reported number); the robot-relative position of the camera; and the camera-relative tag position reported by PhotonVision.
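The "linear algebra" step can be sketched as chaining rigid transforms. Write `a_T_b` for the pose of frame b expressed in frame a. The tag's known field pose satisfies field_T_tag = field_T_robot ∘ robot_T_camera ∘ camera_T_tag, so the robot's pose is field_T_robot = field_T_tag ∘ camera_T_tag⁻¹ ∘ robot_T_camera⁻¹. The minimal Python sketch below is a planar (x, y, heading) simplification of the real 3D computation. The `Pose2` class, `estimate_robot_pose` and the tag coordinates are hypothetical illustrations, not the team's code or the official 2023 field layout. A real robot program would normally use WPILib's own geometry classes (such as `Pose3d` and `Transform3d`) instead.

```python
import math
from dataclasses import dataclass
from typing import Dict


def wrap_angle(a: float) -> float:
    """Wrap an angle to the range (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


@dataclass(frozen=True)
class Pose2:
    """Planar rigid transform: position (x, y) in metres and heading theta in radians."""

    x: float
    y: float
    theta: float

    def compose(self, other: "Pose2") -> "Pose2":
        """Return self ∘ other: apply 'other' in the frame described by 'self'."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2(
            self.x + c * other.x - s * other.y,
            self.y + s * other.x + c * other.y,
            wrap_angle(self.theta + other.theta),
        )

    def inverse(self) -> "Pose2":
        """Return the transform that undoes this one (rotate back, then translate back)."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2(-c * self.x - s * self.y, s * self.x - c * self.y, wrap_angle(-self.theta))


# Hypothetical field-relative tag poses; NOT the official 2023 field layout.
TAG_FIELD_POSES: Dict[int, Pose2] = {
    1: Pose2(15.0, 1.0, math.pi),
    2: Pose2(15.0, 2.7, math.pi),
}


def estimate_robot_pose(
    tag_id: int,
    camera_to_tag: Pose2,    # camera_T_tag, as reported by the vision coprocessor
    robot_to_camera: Pose2,  # robot_T_camera, fixed by where the camera is mounted
    layout: Dict[int, Pose2] = TAG_FIELD_POSES,
) -> Pose2:
    """field_T_robot = field_T_tag ∘ camera_T_tag⁻¹ ∘ robot_T_camera⁻¹."""
    field_to_tag = layout[tag_id]  # select the known tag pose by its reported number
    return field_to_tag.compose(camera_to_tag.inverse()).compose(robot_to_camera.inverse())
```

Because each detection gives a full pose estimate, seeing several tags at once (or the same tag over several frames) provides multiple estimates that can be averaged or fused with wheel odometry.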